While this fascinating paper is not about chemistry, its method could easily be applied to chemical problems without further modification (graph convolutions being the exception), so I feel justified in highlighting it here.

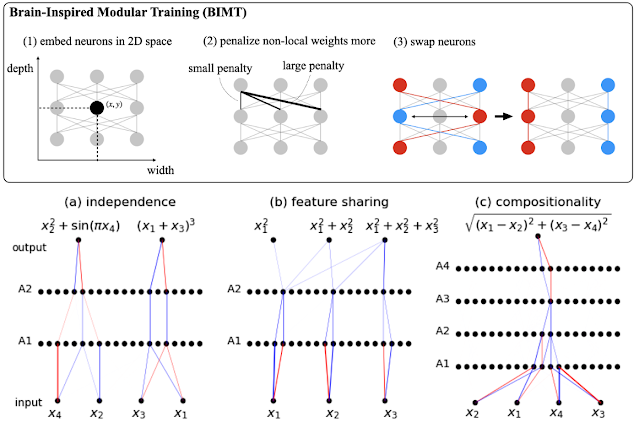

The paper introduces brain-inspired modular training (BIMT), which produces relatively simple neural networks (NNs) that are easier to interpret. "Brain-inspired" refers to the fact that the brain, unlike most NNs, is not fully connected: it is a 3D object wired by physical connections (axons), and longer axons mean slower communication between neurons. BIMT encourages this kind of modularity during training by assigning each neuron a position in space and adding a length-dependent penalty on connections to the loss function (on top of conventional L1 regularisation). This is combined with a swap operation that exchanges the positions of neurons whenever doing so decreases the loss.
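To make the two ingredients concrete, here is a minimal NumPy sketch for a network with a single hidden layer. It is not the authors' implementation: the 2D layout (neurons evenly spaced on horizontal lines one unit apart), the penalty form λ Σ|w_ij|(d_ij + α), the helper names, the single greedy pass over all neuron pairs, and ignoring biases are all assumptions made for illustration.

```python
import numpy as np

# Hypothetical sketch of BIMT's two ingredients (not the paper's code).
# Assumptions: neurons of each layer sit evenly spaced on [0, 1] at a fixed
# height, layers are one unit apart, biases are ignored, and the penalty is
# lam * sum_ij |w_ij| * (d_ij + alpha); alpha > 0 keeps a plain L1 term.

def neuron_positions(n, y):
    """Place n neurons evenly on [0, 1] at height y; returns an (n, 2) array."""
    x = np.linspace(0.0, 1.0, n) if n > 1 else np.array([0.5])
    return np.stack([x, np.full(n, float(y))], axis=1)

def distance_matrix(pos_in, pos_out):
    """Euclidean distance d[j, i] between output neuron j and input neuron i."""
    diff = pos_out[:, None, :] - pos_in[None, :, :]
    return np.linalg.norm(diff, axis=-1)  # shape (n_out, n_in), like W

def connection_cost(W, d, lam=1e-3, alpha=1.0):
    """Length-weighted L1 penalty for a weight matrix W of shape (n_out, n_in)."""
    return lam * np.sum(np.abs(W) * (d + alpha))

def total_cost(W_in, W_out, d_in, d_out, lam, alpha):
    """Penalty for the layers feeding into and out of the hidden layer."""
    return (connection_cost(W_in, d_in, lam, alpha)
            + connection_cost(W_out, d_out, lam, alpha))

def greedy_swaps(W_in, W_out, d_in, d_out, lam=1e-3, alpha=1.0):
    """One greedy pass: exchange hidden neurons a and b if that lowers the cost.

    Positions (and hence d_in, d_out) stay fixed; swapping two neurons means
    exchanging rows a, b of W_in and columns a, b of W_out, so the function
    the network computes is unchanged and only the wiring cost changes.
    """
    W_in, W_out = W_in.copy(), W_out.copy()
    n_hidden = W_in.shape[0]
    best = total_cost(W_in, W_out, d_in, d_out, lam, alpha)
    for a in range(n_hidden):
        for b in range(a + 1, n_hidden):
            perm = np.arange(n_hidden)
            perm[[a, b]] = perm[[b, a]]
            cand_in, cand_out = W_in[perm], W_out[:, perm]  # copies via fancy indexing
            cost = total_cost(cand_in, cand_out, d_in, d_out, lam, alpha)
            if cost < best:
                W_in, W_out, best = cand_in, cand_out, cost
    return W_in, W_out, best

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    p0, p1, p2 = neuron_positions(4, 0), neuron_positions(6, 1), neuron_positions(3, 2)
    d01, d12 = distance_matrix(p0, p1), distance_matrix(p1, p2)
    W1, W2 = rng.normal(size=(6, 4)), rng.normal(size=(3, 6))
    before = connection_cost(W1, d01) + connection_cost(W2, d12)
    W1s, W2s, after = greedy_swaps(W1, W2, d01, d12)
    print(f"penalty before swaps: {before:.5f}, after: {after:.5f}")
```

Because a swap permutes the hidden neurons' incoming rows and outgoing columns together, a ReLU network computes exactly the same outputs before and after; only the wiring penalty changes. That is why, in a scheme like this, swaps can be interleaved with ordinary gradient steps on the task loss plus `connection_cost`.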

The result is much simpler networks that, at least for relatively simple objectives, are intuitive and easier to interpret, as the figure above shows.

The code is available here (Google Colab version). It would be very interesting to apply this to chemical problems!

This work is licensed under a Creative Commons Attribution 4.0 International License.