Rafael Gómez-Bombarelli, David Duvenaud, José Miguel Hernández-Lobato, Jorge Aguilera-Iparraguirre, Timothy D. Hirzel, Ryan P. Adams, and Alán Aspuru-Guzik (2016)

Contributed by Jan Jensen

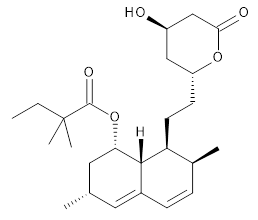

Chemical space is discrete, which makes it hard to search with standard techniques such as gradient-based minimisation. This paper uses a standard machine learning tool called an autoencoder to help solve that problem. One way to think of an autoencoder is as a data compressor: one neural network (the encoder) is trained to describe a data item, such as an image, in some compressed representation, and another network (the decoder) is trained to recover the image from the compressed format.
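To make the compress-and-recover idea concrete, here is a minimal sketch of a linear autoencoder in plain Python. It is a toy, not the architecture from the paper: the data are made-up 2-D points on a line, the latent space is a single number, and the names `encode`, `decode` and `train` are chosen for illustration only.

```python
# Toy data: 2-D points that all lie on the line x2 = 2 * x1,
# so a single latent number is enough to describe each point.
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]


def encode(x, w):
    """Encoder: compress a 2-D point into one latent number."""
    return w[0] * x[0] + w[1] * x[1]


def decode(z, v):
    """Decoder: reconstruct a 2-D point from the latent number."""
    return (v[0] * z, v[1] * z)


def reconstruction_error(w, v):
    """Mean squared error between the inputs and their reconstructions."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x, w), v)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)


def train(steps=5000, lr=0.05):
    """Fit encoder weights w and decoder weights v by gradient descent."""
    w = [0.1, 0.1]  # small, arbitrary starting weights (an assumption)
    v = [0.1, 0.1]
    for _ in range(steps):
        grad_w = [0.0, 0.0]
        grad_v = [0.0, 0.0]
        for x in data:
            z = encode(x, w)
            x_hat = decode(z, v)
            err = (x_hat[0] - x[0], x_hat[1] - x[1])
            # Derivative of the squared error with respect to the latent value.
            dz = 2.0 * (err[0] * v[0] + err[1] * v[1])
            for i in range(2):
                grad_v[i] += 2.0 * err[i] * z / len(data)
                grad_w[i] += dz * x[i] / len(data)
        for i in range(2):
            w[i] -= lr * grad_w[i]
            v[i] -= lr * grad_v[i]
    return w, v


w, v = train()
print("reconstruction error:", reconstruction_error(w, v))
```

The encoder and decoder are trained together, and the only training signal is how well the decoder recovers the original input from the compressed value.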

The interesting thing in the context of chemical space is that the compressed format can be continuous, such as a real-valued vector (the latent space). (Another use of autoencoders is dimensionality reduction for data visualization, e.g. as an alternative to principal component analysis.) The latent space is therefore a continuous representation of the chemical space (a set of SMILES strings) that the autoencoder was trained on. Another neural net can then be trained to map points in this latent space to some chemical property, such as logP, and the space can be searched for regions with desired logP values using techniques as simple as interpolation.
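A search by interpolation can be sketched as follows. This is an illustration under stated assumptions: the two latent vectors are invented, and `predicted_logp` is a hypothetical linear stand-in for the trained property network, not the model from the paper.

```python
def interpolate(z_start, z_end, n_points):
    """Evenly spaced points on the straight line from z_start to z_end."""
    points = []
    for k in range(n_points):
        alpha = k / (n_points - 1)
        points.append([(1 - alpha) * a + alpha * b
                       for a, b in zip(z_start, z_end)])
    return points


def predicted_logp(z):
    """Hypothetical stand-in for a trained property predictor."""
    return 1.5 * z[0] - 0.5 * z[1] + 2.0


def closest_to_target(points, target):
    """Pick the latent point whose predicted logP is nearest the target."""
    return min(points, key=lambda z: abs(predicted_logp(z) - target))


# Invented latent vectors standing in for two encoded molecules.
z_a = [0.0, 0.0]
z_b = [2.0, 2.0]

path = interpolate(z_a, z_b, 5)
best = closest_to_target(path, target=3.0)
print(best, predicted_logp(best))
```

In a real workflow the selected latent point would then be passed through the decoder to obtain a candidate SMILES string.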

One problem with autoencoders is that they are "lossy", which in this case means that not all points in latent space can be decoded to a valid molecule (SMILES string). However, the failure rate is relatively low for the two proof-of-concept applications in the paper.
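One way to quantify this is to decode a batch of latent points and count how many outputs are unusable. The sketch below assumes a list of made-up decoder outputs and uses a deliberately crude syntactic screen (balanced parentheses and paired single-digit ring closures). It is not a chemical validity check; in practice a cheminformatics toolkit would be used instead, for example RDKit, whose `Chem.MolFromSmiles` returns `None` for strings it cannot parse.

```python
def looks_like_valid_smiles(smiles):
    """Crude syntactic screen, NOT a real validity check.

    Only checks that parentheses balance and that every single-digit
    ring-closure label appears an even number of times.
    """
    depth = 0
    open_rings = set()
    for ch in smiles:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
        elif ch.isdigit():
            # A digit opens a ring the first time and closes it the second.
            if ch in open_rings:
                open_rings.remove(ch)
            else:
                open_rings.add(ch)
    return bool(smiles) and depth == 0 and not open_rings


def failure_rate(decoded):
    """Fraction of decoded strings that fail the screen."""
    failures = sum(1 for s in decoded if not looks_like_valid_smiles(s))
    return failures / len(decoded)


# Made-up decoder outputs for illustration; two of the six are malformed.
decoded = ["CCO", "c1ccccc1", "CC(C)O", "c1ccccc", "CC(C", "C1CC1"]
print("failure rate:", failure_rate(decoded))
```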

This is a very interesting new tool in the hunt for molecules with new properties.

This work is licensed under a Creative Commons Attribution 4.0
